Artificial intelligence has many facets and applications. As read in the article “Artificial Intelligence: Fundamental Principles”, one of the concepts used in this field is computer vision. Computer vision is defined as the ability for a computer to replace the human eye, process incoming information and, if required, create an appropriate response. To achieve a motion sensing system, the input information will need to be a video. A video is a rapid succession of images creating an illusion for the human eye and brain of seeing an animation.

Today’s computers are very efficient, but analysing video content is a tremendous information processing effort. For example, a 1-minute video running at 60 frames per second (FPS) with a 1920x1080 resolution requires a validation of over 2 million pixels for every image. If we want to detect movement, i.e. identify differences between two successive images, it will require the validation of over 4 million pixels. This process represents over 250 million mathematical operations per minute, and that is only to detect differences between two successive images. The amount of information to be processed significantly exceeds the processing power of computers currently offered on the market.

Researchers in computer vision have created many different processes and algorithms to reduce the number of required operations and achieve real-time motion detection. For more clarity, we will analyse a real-life example. In this context, a digital screen will display the face of a person walking by a camera.

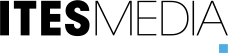

The incoming data is the video taken from a person’s camera. The objective is to isolate the person in the video and determine the direction he is going. For example, let’s take this three-second video:

Figure 1. Video of a passer-by

Image segmentation from a video

Running at 60 frames per second, this means the video has a total of 180 successive images for a 3-second period. To significantly reduce the number of mathematical operations, we will use the first and the last images, along with 2 images per second, for a total of 8 images. The analysed images are as follow:

Figure 2. Image segmentation at every 500 milliseconds from a video

Logical masking

For the next operation, we will compare, in order, the differences between two sequenced images. As seen previously, an image in this format has over 2 million pixels. To significantly reduce the number of operations and identify differences between two images, we will determine the sections in the starting image that require validation. To cover the entire image, a mosaic-shaped mask will identify the areas that need to be validated in the image. Only areas identified by the mask will be validated between images; thus, we will be able to create a set of areas that illustrate changes between two sequences.

Figure 3. Logical mosaic on an image to compare successive images

Comparing two images

By applying this method on two successive images, we are able to identify the differences occurring in the green areas. Differing content in the green areas between the first two images can be identified. These differences will be illustrated as red areas.

Figure 4. Illustration of observed differences between two images

Analysing and understanding movement

To have a complete illustration of the observed movement, the validation operation between the successive images must be applied on all other images for analysis and processing. Let’s use as an example the results of the comparison between the first and the second image and between the second and the third image.

Figure 5. Comparison results between images 1 and 2 and between images 2 and 3

As shown by the results of the image comparison, by analysing the coordinates of the red areas, we are able to mathematically determine that the movement (what we have defined as the ‘Zone of interest’) goes from the left to the right. We see in the first comparison that the red areas appear from the second column of the mosaic, whereas in the second comparison, the red areas start at the third column, thus leading us to this conclusion. Having the data about this perceived movement, it would possible, through preconfigured algorithms, to create a reaction to this movement. In cases where the camera would also be equipped with a dynamic display, we could show an avatar looking at the passerby and following him with its eyes, for example.

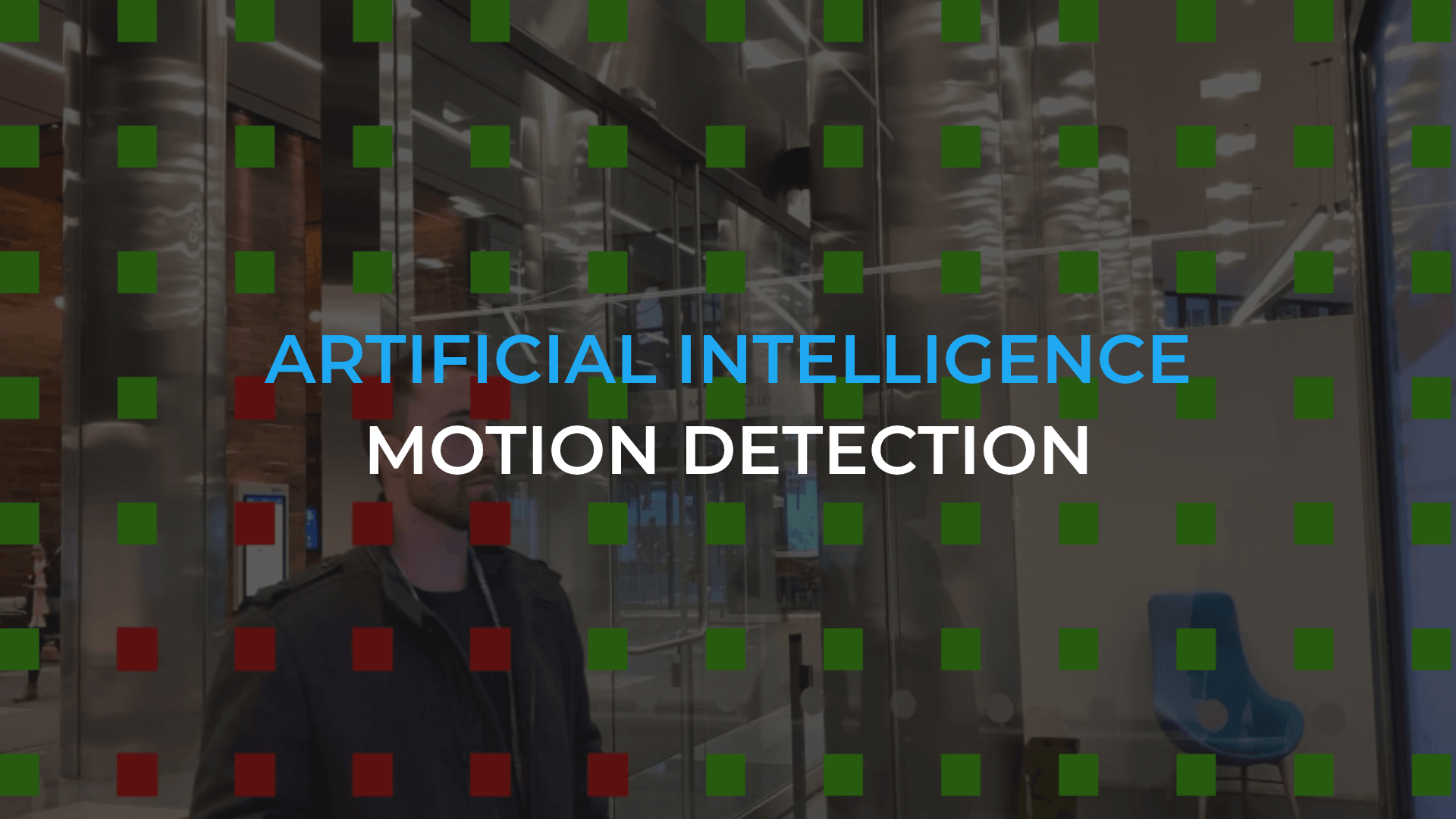

Dynamic display signage, combined with computer vision, can lead to significant innovations. Interactions on touchscreens will be interpreted as movement which will be able to execute commands. An evolving sector that is at the dawn of a new era brings both exciting changes and multiple challenges – two ingredients that fuel innovation and passion for product developers. This demonstration of motion detection is but the tip of the iceberg of an innovative future. Computer vision will be the key to better understand audiences’ reactions before a dynamic display, improve content customization, develop human-machine interactions, and much more!

*Multimedia content source

ITESMEDIA – Case study – Industrielle Alliance