Artificial intelligence is increasingly prevalent in all areas of our society. Definitions and terms vary across marketing initiatives and product-positioning strategies, but the fundamental concept can be summarized as giving a machine the ability to reason as intricately as the human mind does. To reach this goal, researchers in cognitive computing must both master software programming and study the biological functioning of the human brain: how it interprets the five senses (sight, hearing, touch, taste and smell) and how it chooses an appropriate response to the information captured.

Figure 1. Facial recognition through concepts of computer vision

The five senses are information sensors for humans. The information they capture is sent to the brain and analyzed by its neural network. Once the analysis is complete, the brain decides on a reaction according to the information received. For example, when a person looks at a dynamic display showing the number of available spaces in a parking lot, sight is the sense being used. The sensors are the eyes, which capture the information displayed on the screen. The number of available parking spaces is then sent to the brain for analysis. If the brain concludes that there are no more available spaces, it tells the body to keep moving to another parking area; otherwise, its command is to enter the lot and park the vehicle.
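The parking example can be sketched as a small sense, analyze, act chain. This is only an illustration: the function names `sense` and `decide` are hypothetical, and the sketch assumes the display shows nothing but the number of free spaces.

```python
def sense(display_text: str) -> int:
    """Sensor step: read the number shown on the display.

    Assumption: the display shows only a number, e.g. "12".
    """
    return int(display_text.strip())


def decide(available_spaces: int) -> str:
    """Analysis step: turn the captured information into an action."""
    if available_spaces <= 0:
        return "keep moving to another parking area"
    return "enter the lot and park"


if __name__ == "__main__":
    for shown in ("0", "12"):
        print(shown, "->", decide(sense(shown)))
```

The rule here is fixed in advance, so the chain always reacts the same way to the same reading.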

Figure 2. Representation of a neural network in the shape of a human brain

From a more technical standpoint, the fundamental difference between traditional algorithms and artificial intelligence is that a traditional algorithm is a fixed sequence of mathematical operations leading to a given result. Artificial intelligence also uses traditional algorithms, but its results depend on a massive set of data. This allows the algorithm's behavior to adapt over time. For example, forecasting a medical clinic’s staffing needs based on the occupancy rate of its waiting room will show different results depending on whether it is summer or winter, since illnesses such as the flu are much more common in the winter months. Computer systems can carry out this type of analysis without relying on artificial intelligence. In this scenario, the added value of artificial intelligence would come from installing cameras that read the apparent symptoms of each patient through computer vision. When the doctor makes a diagnosis, it becomes possible to link the visible symptoms to the associated illness. The idea is therefore to capture visual information, interpret what was perceived, and have doctors confirm the actual situation. This chain of operations helps the machine learn from the correct results based on its observations; this concept is called machine learning. Once an appropriate amount of data has been collected and validated, the machine will be able to make its own preliminary diagnoses and provide suggestions (for example, isolating people who might have the flu so that they do not infect patients who are in the waiting room for a sprained ankle).
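The learning chain described above (observe symptoms, have a doctor confirm the diagnosis, then suggest once enough validated data exists) can be sketched with a simple frequency count. This is a deliberately minimal stand-in for a real machine learning model: the class `SymptomLearner`, its methods, the `min_samples` threshold and the symptom labels are all hypothetical, and the symptoms are assumed to have already been extracted by a computer vision step that is not shown.

```python
from collections import Counter, defaultdict


class SymptomLearner:
    """Toy learner: counts doctor-confirmed diagnoses per symptom set."""

    def __init__(self, min_samples: int = 3):
        # Assumed threshold: how many confirmed cases are needed
        # before the machine is allowed to make a suggestion.
        self.min_samples = min_samples
        self.counts = defaultdict(Counter)

    def record(self, symptoms, confirmed_diagnosis):
        """Store one observation validated by a doctor."""
        self.counts[frozenset(symptoms)][confirmed_diagnosis] += 1

    def suggest(self, symptoms):
        """Return the most frequent confirmed diagnosis, or None
        when too little validated data has been collected."""
        seen = self.counts.get(frozenset(symptoms))
        if not seen or sum(seen.values()) < self.min_samples:
            return None
        return seen.most_common(1)[0][0]


if __name__ == "__main__":
    learner = SymptomLearner(min_samples=2)
    flu_like = {"cough", "flushed face"}
    learner.record(flu_like, "flu")
    print(learner.suggest(flu_like))  # not enough data yet
    learner.record(flu_like, "flu")
    print(learner.suggest(flu_like))  # now a preliminary suggestion
```

Unlike a fixed rule, the suggestion here changes as more confirmed cases are recorded, which is the adaptability the paragraph describes. A production system would use a statistical model rather than exact matching on symptom sets.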

This chain can become very complex and involve multiple steps. It is therefore important to set the goal we wish to reach through artificial intelligence right from the start. Once this objective is defined, data and information gathering can focus on what is relevant to that goal, which is the recommendation or action the system will produce.

Figure 3. Representation of an information processing network

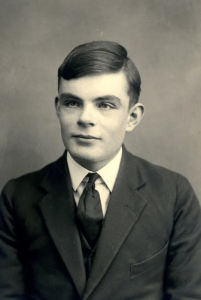

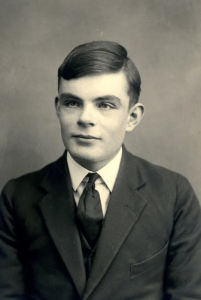

The basic concept of artificial intelligence was expressed by Alan Turing, the British mathematician and logician who worked as a codebreaker for the British government during World War II. His method for evaluating the level of an artificial intelligence, which he proposed in 1950 and which is better known in the field as the “Turing test”, is very simple: a human communicating with a machine must not be able to tell that they are talking to a machine. There has never been generally accepted proof that a machine has passed this test. However, with the evolution of the processing power of computers, along with continuing developments in artificial intelligence, we might one day see a team of researchers create a machine that can not only imitate a person convincingly, but also consistently fool actual humans into thinking they are communicating with a fellow human being.

Figure 4. Alan Turing, British mathematician and logician

References

Britannica - Alan Turing - British mathematician and logician. Retrieved November 19, 2020 from: https://www.britannica.com/biography/Alan-Turing

The Next Web - A glossary of basic artificial intelligence terms and concepts. Retrieved November 19, 2020 from: https://thenextweb.com/artificial-intelligence/2017/09/10/glossary-basic-artificial-intelligence-terms-concepts/